Hiring developers with strong problem-solving skills is essential. Beyond coding, these skills determine their ability to tackle challenges like debugging, designing scalable systems, and meeting deadlines. Employers prioritize this trait, with 91% considering it a top factor in hiring decisions.

To assess problem-solving effectively, use developer assessment tools that focus on three core areas: technical skills (coding, debugging, algorithms), analytical thinking (breaking down problems, weighing trade-offs), and communication (explaining solutions, collaborating). Realistic scenarios tied to the job role, open-ended tasks, and calibrated difficulty levels ensure assessments reflect actual work challenges.

Key takeaways include:

- Realistic tasks: Focus on job-specific challenges, not abstract puzzles. You can also assess technical skills without coding tests by using portfolio reviews and pair programming.

- Process over results: Evaluate how candidates approach problems, not just the final solution.

- Communication: Test their ability to explain and adapt during feedback.

- Scoring rubrics: Use measurable criteria to reduce bias and ensure consistency.

Designing Realistic Problem Scenarios

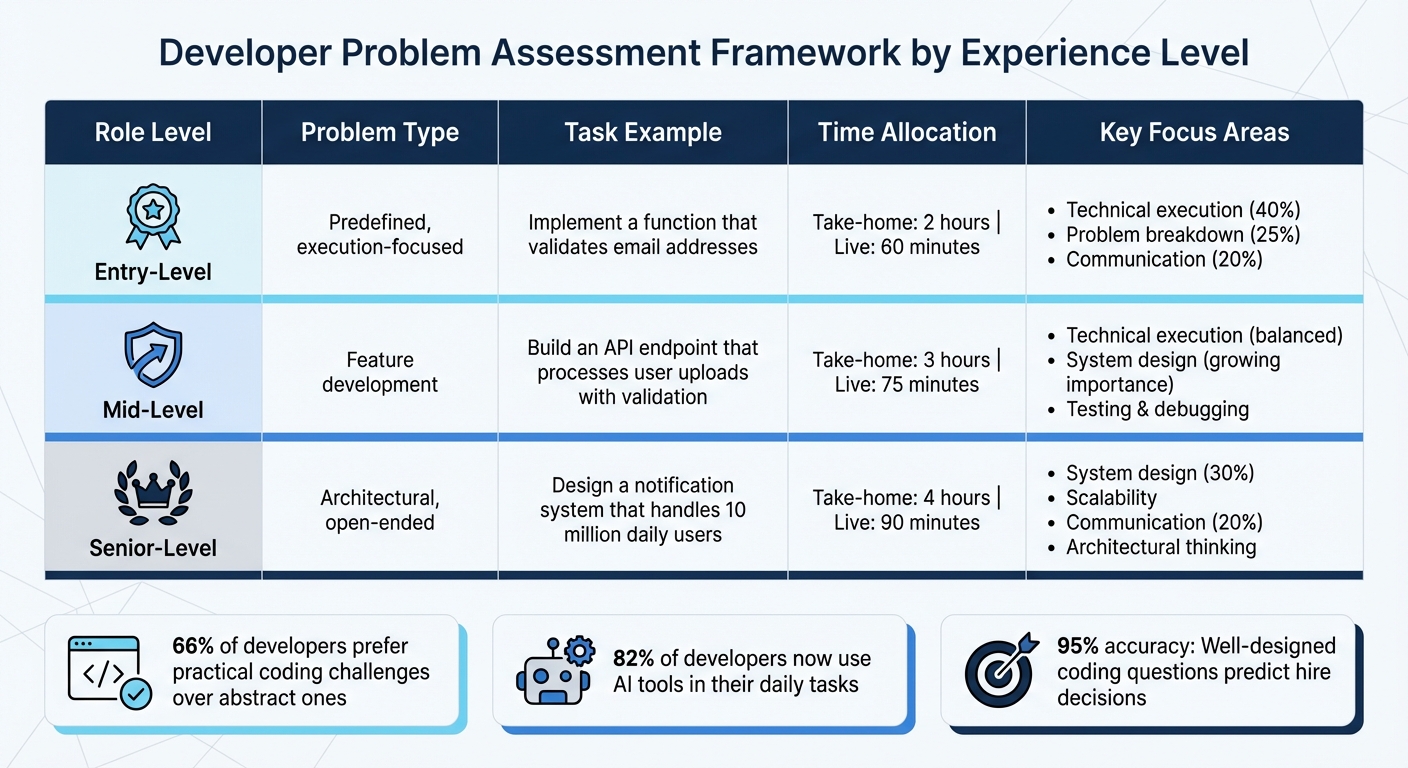

Developer Problem Assessment Framework by Experience Level

Creating interview problems that reflect real-world tasks is key to assessing candidates effectively. Instead of relying on abstract algorithmic puzzles, focus on scenarios that align with your company's tech stack, business needs, and daily operations. Research indicates that 66% of developers prefer practical coding challenges over abstract ones. These realistic challenges provide a better window into a candidate's abilities.

Start by defining what success in the role looks like within the first 90 days. For example, a backend developer at a fintech company might need to build secure API endpoints for payment processing. Meanwhile, a frontend developer at a gaming studio could be tasked with creating UI components capable of handling real-time updates. Isabelle Fahey, Head of Growth at CloudDevs, emphasizes:

"A well-designed developer skills assessment is more than just a test; it's a preview of the collaborative and problem-solving environment a candidate can expect if they join your team."

Tailoring problems to reflect the specific challenges of the role not only helps in evaluation but also gives candidates a sense of what the job entails.

Matching Problems to the Job Role

Effective assessments focus on job-specific skills. For instance, if you're hiring someone to maintain legacy systems, a great task might involve reviewing a pull request with deliberate bugs or inefficient logic. This not only tests technical expertise but also gauges communication skills.
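A review exercise of this kind can be quite small. The sketch below is a hypothetical snippet: the `paginate` helper and its seeded off-by-one defect are invented for illustration and do not come from any real codebase. It is followed by the fix a reviewer would be expected to propose.

```python
from typing import List, Sequence


def paginate(items: Sequence[int], page: int, page_size: int) -> List[int]:
    """Return the items on a 1-based page (version shown to the candidate)."""
    # Seeded defect: `page` is documented as 1-based but used as 0-based,
    # so asking for page 1 silently skips the first `page_size` items.
    start = page * page_size
    return list(items[start:start + page_size])


def paginate_fixed(items: Sequence[int], page: int, page_size: int) -> List[int]:
    """Reference fix kept by the interviewer for comparison."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    start = (page - 1) * page_size
    return list(items[start:start + page_size])
```

A strong review names the defect, explains its impact on callers, and suggests both the fix and a test for page 1. How the candidate phrases that feedback is part of the signal.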

Let candidates use tools they’re comfortable with, like VS Code or Jira, to mimic real-world workflows. With 82% of developers now using AI tools in their daily tasks, consider how candidates validate and enhance AI-generated code as part of the evaluation.

Before crafting your problems, map out a competency blueprint to distinguish between "must-have" and "nice-to-have" skills. For example:

- Backend roles: Strong SQL/PostgreSQL skills are essential; familiarity with GraphQL is a bonus.

- Senior positions: Include architectural challenges, such as designing a high-load application or refactoring legacy code, to assess system-level thinking.

This tailored approach ensures that your assessments align with the role's unique demands.

Setting the Right Difficulty Level

The complexity of your problems should match the candidate's experience level. For entry-level developers, tasks might involve implementing a basic algorithm or writing a simple function. Mid-level candidates, on the other hand, could tackle feature development, like adding an API endpoint with business logic. Senior engineers should face open-ended architectural problems that require scalability and strategic decision-making.

To ensure your problems are fair and realistic, test them with engineers at similar levels. This helps you calibrate the difficulty, estimate completion times, and fine-tune hints. A well-designed problem should fit within the interview timeframe while still reflecting real-world challenges. For take-home projects, aim for tasks that take 2 to 4 hours, while live sessions should last 60 to 90 minutes.

Once calibrated, you can further challenge candidates with open-ended tasks that test their depth and adaptability.

Using Open-Ended Problems

Open-ended problems are excellent for evaluating how candidates think through trade-offs and articulate their reasoning - skills that are critical in production environments.

Structure these tasks in layers. Begin with a straightforward requirement, then introduce constraints (e.g., scaling data from 10 to 1 million records) to assess adaptability. This approach moves beyond rote memorization and highlights a candidate's ability to handle complexity. It’s particularly effective for system design and scalability assessments.
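As a rough sketch of what a layered task can look like: the first function below is a reasonable answer while the input holds about 10 records, and the second is the kind of revision a candidate might reach once the constraint grows to 1 million. The record shape (a list of email strings) and both function names are assumptions made for illustration.

```python
from typing import List


def find_duplicates_naive(emails: List[str]) -> List[str]:
    """Layer 1: scan the rest of the list for each item. Fine for ~10 records, O(n^2)."""
    duplicates: List[str] = []
    for i, email in enumerate(emails):
        # Report an email once, the first time a later copy is found.
        if email in emails[i + 1:] and email not in duplicates:
            duplicates.append(email)
    return duplicates


def find_duplicates_scalable(emails: List[str]) -> List[str]:
    """Layer 2: a single pass with sets, roughly O(n), suited to ~1M records."""
    seen = set()
    reported = set()
    duplicates: List[str] = []
    for email in emails:
        if email in seen and email not in reported:
            duplicates.append(email)
            reported.add(email)
        seen.add(email)
    return duplicates
```

Both versions return each duplicated value once, though the order of results can differ between them. The interesting part of the interview is whether the candidate notices why the first version stops being viable and what the second one costs in memory.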

For senior roles, system design questions work especially well. For example, ask candidates to design a service, like a ride-sharing platform or a URL shortening system. Focus on scalability, storage, and reliability rather than perfect implementation. Ambiguous requirements can also prompt candidates to ask clarifying questions, simulating real-life interactions with product managers and stakeholders.

| Role Level | Problem Type | Task Example |
|---|---|---|
| Entry-Level | Predefined, execution-focused | Implement a function that validates email addresses |
| Mid-Level | Feature development | Build an API endpoint that processes user uploads with validation |
| Senior-Level | Architectural, open-ended | Design a notification system that handles 10 million daily users |
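To make the entry-level row concrete, here is a minimal sketch of an acceptable answer to the email validation task. The function name and the pattern are assumptions chosen for illustration. The check is deliberately simplified and is not a full RFC 5322 validator.

```python
import re

# Simplified pattern: exactly one "@", no whitespace, and a dot in the domain.
# This is an illustrative approximation, not a standards-compliant validator.
_EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(address: str) -> bool:
    """Return True if `address` looks like a plausible email address."""
    return _EMAIL_PATTERN.fullmatch(address) is not None
```

At this level, useful signal comes from whether the candidate states the limits of their check and tests inputs such as an empty string or a missing domain.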

Evaluating Solution Approaches and Execution

When a candidate begins tackling your problem, shift your attention from the final product to the process they use to build it. This phase is all about understanding how well they can transform ideas into functional code while making sound technical choices under pressure. It’s also where you distinguish between developers who rely on memorized patterns and those who truly grasp the principles of their craft.

Reviewing Algorithm and Data Structure Choices

Pay close attention to whether the candidate delivers a solution that’s not only correct but also efficient and meets the problem’s core requirements. Look at their ability to select the right data structures - like choosing a Hash Map for O(1) lookups - and how they weigh the trade-offs of their decisions. When candidates explain their reasoning behind choosing a particular algorithm or framework, they’re showcasing the kind of critical thinking that’s crucial in real-world development.
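As an illustration of the kind of trade-off worth probing, the sketch below contrasts a linear scan with a hash map lookup in Python. The `User` record and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class User:
    user_id: int
    name: str


def find_user_scan(users: List[User], user_id: int) -> Optional[User]:
    """Linear scan: O(n) per lookup, no extra memory."""
    for user in users:
        if user.user_id == user_id:
            return user
    return None


def build_index(users: List[User]) -> Dict[int, User]:
    """One O(n) pass builds a hash map keyed by user_id."""
    return {user.user_id: user for user in users}


users = [User(1, "Ada"), User(2, "Linus"), User(3, "Grace")]
index = build_index(users)

# Average-case O(1) lookup once the index exists.
match = index.get(2)
```

A candidate who picks the dictionary should also be able to name its costs: extra memory, and an index that must be kept in sync when the underlying list changes.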

Strong candidates have a knack for breaking down complex problems into smaller, manageable parts while focusing on what’s essential. For roles that involve scaling, explore how their solutions would adapt when moving from, say, 1,000 users to 10 million.

"Collaboration and communication skills are often just as important as technical depth. While testing candidates on their CS staples such as algorithms, data structures, and language proficiency, there is certainly more to a well-rounded candidate."

Once algorithmic reasoning is established, it’s time to dive into the quality of their code and their debugging approach.

Assessing Code Quality and Debugging

After evaluating their algorithmic decisions, shift to how they implement those ideas in code. Does their code reflect clarity, modularity, and readiness for production? Look for practices like writing reusable functions, proper documentation, and a structure that supports long-term maintainability.

You can also gauge their debugging skills by observing how they identify and fix intentional errors under time constraints. This mirrors the reality of a developer’s day-to-day, where debugging is a constant task. In fact, software engineers spend an average of 9.4 hours per week writing and refining code, often as part of a team.

If you’re reviewing GitHub portfolios, consistency in coding style, organization, and documentation can be a strong indicator of someone who values quality, even when no one is looking over their shoulder.

Evaluating System Design and Scalability

For senior candidates, it’s essential to assess their ability to design systems that are both scalable and maintainable. This involves breaking down large systems into smaller, manageable components while balancing factors like latency, scalability, and maintainability. A strong candidate will analyze trade-offs between different architectural options and justify their choices based on the problem’s specific constraints.

Look for their ability to spot performance bottlenecks and suggest optimizations like indexing or caching. When discussing system design, they should be able to estimate capacity needs - such as user load, storage demands, and delivery speeds - rather than giving vague answers about scaling.
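A back-of-the-envelope estimate of this kind can be sketched in a few lines. Apart from the 10 million daily users taken from the notification system example, every input below is an assumption chosen for illustration: 5 notifications per user per day, a 1,000-byte payload, a 3x peak factor, and 30 days of retention.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass(frozen=True)
class CapacityEstimate:
    avg_per_second: float
    peak_per_second: float
    storage_terabytes: float


def estimate_capacity(
    daily_users: int,
    notifications_per_user: int,
    payload_bytes: int,
    peak_factor: float,
    retention_days: int,
) -> CapacityEstimate:
    """Rough sizing: average and peak throughput plus retained storage."""
    total_per_day = daily_users * notifications_per_user
    avg_per_second = total_per_day / SECONDS_PER_DAY
    storage_bytes = total_per_day * payload_bytes * retention_days
    return CapacityEstimate(
        avg_per_second=avg_per_second,
        peak_per_second=avg_per_second * peak_factor,
        storage_terabytes=storage_bytes / 1e12,
    )


# All arguments except the user count are illustrative assumptions.
estimate = estimate_capacity(10_000_000, 5, 1_000, 3.0, 30)
```

With these assumed inputs the estimate comes to roughly 580 notifications per second on average, about 1,700 at peak, and 1.5 TB of retained data. The exact figures matter less than whether the candidate states their assumptions and reasons from them.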

Team CodeSignal highlights this shift in focus:

"System design interviews have evolved beyond drawing boxes and arrows... The focus shifts from memorizing system patterns to demonstrating problem-solving methodology and technical communication."

Finally, evaluate how candidates handle legacy system integration and whether they ask clarifying questions when requirements are unclear. Their problem-solving process and ability to communicate technical ideas effectively are far more valuable than simply recalling system design patterns from memory.

Testing Communication and Collaboration Skills

Technical expertise alone doesn’t cut it - clear communication and effective teamwork are just as important. According to the 2025 HackerRank Developer Skills Report, which analyzed data from 26 million developers and over 3 million assessments, communication plays a key role in skills-first hiring. With 81% of organizations struggling to address tech skills gaps, it’s clear that candidates need to not only solve problems but also explain their approach effectively, aligning with what developers want from the hiring process.

While technical assessments measure problem-solving ability, evaluating how candidates articulate their reasoning and collaborate with others is equally essential. This section explores practical ways to assess these skills during interviews.

Conducting Live Coding or Pair Programming

Live coding sessions are a great way to observe how candidates think on their feet and work with others. Instead of assigning rigid tasks with one correct answer, use open-ended scenarios that encourage discussion. Paige Schwartz from CodeSignal advises creating:

"less prescriptive scenarios where, like the real world, there are a myriad of ways to approach the problem".

These scenarios push candidates to ask clarifying questions and engage in problem-solving, just as they would in a real-world team setting.

Pairing candidates with current team members can provide a window into their real-time collaboration skills. Observe how they debug code together and whether they use inclusive language like "we", which reflects a team-oriented mindset. Start with an easy warm-up task, then gradually increase complexity - such as introducing a new constraint mid-task - to see how well they adapt while explaining their reasoning. Pay attention to how they justify their technical decisions, as this often reveals their ability to collaborate effectively.

Evaluating Explanations of Design Choices

Encourage candidates to restate the problem before diving into code to ensure they’ve fully understood it. As they work, ask them to explain their thought process, including trade-offs, data structure decisions, and alternative approaches.

To test their ability to think ahead, pose questions like, "How would this solution handle a data set of 1 million records?" This helps gauge their awareness of scalability and performance concerns. As Adrien Fabre, Server Software Engineer at Dashlane, aptly puts it:

"In a team poor communication skills is a big problem because as soon as you start working with another person, your job is not to only code but to also share ideas and thoughts with your coworkers".

Testing Response to Feedback

How candidates handle feedback is a strong indicator of their teamwork potential. During an iterative review, provide constructive criticism or introduce new constraints to see how well they adjust their solutions on the spot. Strong candidates will ask clarifying questions, weigh trade-offs, and adapt without becoming defensive or dismissive.

You can also simulate a pull request review by giving candidates a flawed piece of code and asking for their feedback. This approach highlights whether their communication style is constructive and analytical. Look for candidates who are comfortable admitting knowledge gaps - for example, saying, "I’m not sure about this approach, can we discuss it?" demonstrates openness and a willingness to learn. Developers who can navigate these conversations with transparency and respect are invaluable team players.

Creating a Scoring Rubric

A scoring rubric transforms subjective impressions into measurable data by focusing on specific, observable behaviors instead of relying on gut feelings. This approach helps reduce bias, which is a significant issue - 65% of technical recruiters report bias in their current hiring processes. Structured rubrics are designed to tackle this problem directly.

The core of a good rubric is translating abstract skills into clear, measurable actions. For example, instead of vaguely assessing "problem-solving", your rubric might evaluate specific behaviors like clarifying scope before coding versus jumping straight into implementation. This ensures every interviewer uses the same consistent framework to evaluate candidates.

Defining Evaluation Criteria

The first step is to identify the key competencies that matter for the role you're hiring for. Building on the earlier discussion of technical execution, analytical thinking, and communication, you’ll need to define how these skills manifest in practice. Here are some examples:

- Problem Decomposition: Breaking complex tasks into manageable steps.

- Technical Execution: Writing correct, fluent, and idiomatic code.

- System Design: Creating scalable abstractions and ensuring separation of concerns.

- Communication: Clearly explaining trade-offs and design decisions.

- Testing and Debugging: Addressing edge cases and resolving issues independently.

As the Karat Team aptly puts it:

"If a competency is on the interview rubric it's signal. If it's not, then it's noise".

This means you should ignore irrelevant factors like filler words, nervousness, or knowledge of minor cultural details that can be learned quickly. Focus exclusively on the skills that truly matter for the job.

Once your evaluation criteria are clearly defined, the next step is assigning appropriate weights to each competency.

Assigning Weighted Scores

The importance of each criterion will vary depending on the role and level of seniority. For junior developers, the focus is often on technical execution and code correctness, as these are foundational skills. For senior roles, greater emphasis should be placed on system design, architectural thinking, and testing.

Weighting should also align with the specific requirements of the role. For instance, a payments team may prioritize expertise in API security, while a frontend team might value knowledge of state management patterns. Isabelle Fahey, Head of Growth at CloudDevs, highlights the importance of this approach:

"A well-defined competency blueprint is your single source of truth. It prevents you from testing for trendy but irrelevant skills".

To ensure consistency, run calibration sessions where interviewers independently score a sample submission and then discuss discrepancies. This process helps align expectations on how weights and scores should be applied.
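One lightweight way to decide what to discuss in a calibration session is to flag the categories where independent scores diverge most. The sketch below assumes each interviewer submits 1-5 ratings per category for the same sample submission. The function name, the interviewer labels, and the default threshold of more than one point are illustrative assumptions.

```python
from typing import Dict, List


def flag_discrepancies(
    scores: Dict[str, Dict[str, int]], max_spread: int = 1
) -> Dict[str, int]:
    """Return categories whose highest and lowest ratings differ by more than max_spread.

    `scores` maps interviewer name -> {category: rating} for one sample submission.
    """
    by_category: Dict[str, List[int]] = {}
    for ratings in scores.values():
        for category, rating in ratings.items():
            by_category.setdefault(category, []).append(rating)
    return {
        category: max(values) - min(values)
        for category, values in by_category.items()
        if max(values) - min(values) > max_spread
    }


sample_scores = {
    "interviewer_a": {"Communication": 4, "System Design": 2},
    "interviewer_b": {"Communication": 3, "System Design": 5},
    "interviewer_c": {"Communication": 4, "System Design": 3},
}
to_discuss = flag_discrepancies(sample_scores)
```

In this made-up sample, only "System Design" is flagged, with a three-point spread, so that is where the group would spend its calibration time.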

Sample Scoring Table

Here’s an example of how weights might vary by role level:

| Scoring Category | Weight (Junior) | Weight (Senior) | Evaluation Focus |
|---|---|---|---|
| Problem Breakdown | 25% | 15% | Ability to decompose tasks and clarify scope. |
| Technical Execution | 40% | 20% | Code correctness, fluency, and idiomatic use. |
| System Design | 5% | 30% | Abstractions, scalability, and separation of concerns. |
| Communication | 20% | 20% | Explaining trade-offs and design choices clearly. |
| Testing & Debugging | 10% | 15% | Identifying edge cases and fixing bugs independently. |

Use a consistent 1–5 rating scale to evaluate each category, with each number tied to specific behaviors. For instance, a "5" in problem-solving might indicate: "Clarifies scope first, outlines multiple approaches with pros and cons, and handles all edge cases independently." In contrast, a "3" might mean: "Implements a basic solution independently but struggles with optimization or edge cases".
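To show how the weights and the 1–5 scale combine, here is a minimal sketch that turns one candidate's category ratings into a single weighted score. The weights are copied from the sample table. The function name and the candidate ratings are hypothetical.

```python
from typing import Dict

# Weights in percent, copied from the sample scoring table above.
WEIGHTS: Dict[str, Dict[str, int]] = {
    "junior": {
        "Problem Breakdown": 25,
        "Technical Execution": 40,
        "System Design": 5,
        "Communication": 20,
        "Testing & Debugging": 10,
    },
    "senior": {
        "Problem Breakdown": 15,
        "Technical Execution": 20,
        "System Design": 30,
        "Communication": 20,
        "Testing & Debugging": 15,
    },
}


def weighted_score(ratings: Dict[str, int], level: str) -> float:
    """Combine 1-5 category ratings into one weighted score on the same 1-5 scale."""
    weights = WEIGHTS[level]
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the rubric categories")
    if any(not 1 <= rating <= 5 for rating in ratings.values()):
        raise ValueError("each rating must be between 1 and 5")
    return sum(weights[category] * ratings[category] for category in weights) / 100


# Hypothetical ratings for one candidate.
ratings = {
    "Problem Breakdown": 4,
    "Technical Execution": 5,
    "System Design": 2,
    "Communication": 3,
    "Testing & Debugging": 4,
}
junior_score = weighted_score(ratings, "junior")
senior_score = weighted_score(ratings, "senior")
```

The same ratings yield 4.1 under the junior weights and 3.4 under the senior weights, because the weak system design rating counts for 5% in one case and 30% in the other.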

This structured rubric creates a unified evaluation process, ensuring consistency across technical, design, and communication assessments, as outlined earlier.

Using Tools to Streamline Assessments

Once you’ve set up a structured rubric, the next step is to simplify your assessment process with the right tools. The right platforms can help automate early-stage filtering, bring consistency to evaluations, and connect you with developers who are already engaged and open to new roles. Let’s break down how leveraging specialized tools can make this process more efficient.

How daily.dev Recruiter Helps You Find Developers

Hiring developers who are both technically skilled and interested in new opportunities is no easy task. In fact, 74% of companies report difficulties in finding qualified technical talent. Traditional sourcing methods often rely on cold outreach, which many developers either ignore or actively dislike. daily.dev Recruiter takes a different approach, connecting recruiters with passive, pre-qualified developers who are already part of an active professional network.

Here’s how it works: every introduction on daily.dev Recruiter is warm, double opt-in, and highly relevant. Developers receive targeted role descriptions that align with their skills and interests, and they decide whether to engage. This means recruiters only interact with candidates who are genuinely interested in the role, eliminating the noise of cold emails and endless screening calls. Instead, the process focuses on trust and intent, creating meaningful connections with developers who are actively engaged in their professional growth.

This method doesn’t just make sourcing easier - it also improves the quality of your assessments. By starting with pre-qualified candidates, you can spend less time filtering out unfit applicants and more time validating the specific skills that matter for the role. And when paired with technical assessment platforms, the entire evaluation process becomes even more refined.

Using Technical Assessment Platforms to Evaluate Skills

Technical assessment platforms are a game-changer for evaluating candidates’ abilities. These tools deliver standardized coding challenges tailored to your tech stack, serving as a first-pass filter to identify qualified technical candidates with strong foundational skills before moving to live interviews. In fact, a well-designed coding question can predict the "Hire" decision of a full interview loop with about 95% accuracy.

To get the most out of these platforms, design assessments that reflect real-world work environments. Candidates should be able to use tools like VS Code, access relevant libraries, and work in conditions that mimic the job they’re applying for. It’s also crucial to calibrate these challenges internally with a sample group to ensure the difficulty and timing are realistic. Poorly designed assessments can unintentionally filter out great candidates.

Another key point: keep assessments appropriately scoped. Developers, especially those sourced through platforms like daily.dev Recruiter, are unlikely to complete lengthy or overly complex challenges that feel like unpaid work. As Isabelle Fahey, Head of Growth at CloudDevs, explains:

"A well-designed developer skills assessment is more than just a test; it's a preview of the collaborative and problem-solving environment a candidate can expect if they join your team".

Finally, make sure your assessment platform integrates seamlessly with your Applicant Tracking System (ATS). This integration allows candidate data and assessment results to flow directly into your scoring rubric, making it easier to objectively compare applicants and move top performers through the hiring process quickly.

Conclusion

Using a structured checklist to evaluate developer problem-solving skills turns hiring into a more objective and evidence-driven process. By setting clear criteria upfront - whether it’s focusing on code quality, debugging techniques, or system design - while also assessing soft skills, you bring consistency to interviews and eliminate the uncertainty of subjective evaluations. This approach not only strengthens how you vet technical skills but also aligns with industry trends, which consistently highlight problem-solving as the most critical skill for developers, even surpassing expertise in specific programming languages.

The impact is measurable. Structured assessments significantly improve predictive accuracy, with well-crafted coding challenges aligning with final hiring decisions about 95% of the time. They also streamline the process by filtering out unqualified candidates early, saving time and resources. Plus, candidates appreciate the transparency and professionalism, which reflects positively on your engineering culture. These benefits create a strong foundation for integrating advanced recruitment tools.

Once you’ve established a solid assessment framework, leveraging the right sourcing platform can elevate your hiring strategy even further. daily.dev Recruiter takes this to the next level by connecting you with pre-qualified, motivated developers. Instead of relying on cold outreach or spending hours on screening calls, you start with warm, double opt-in introductions to candidates who are genuinely interested in engaging with your team. This allows you to focus your structured evaluation process on candidates who are already invested, improving efficiency and the overall quality of assessments.

FAQs

What are the best practices for creating effective coding challenges for developer interviews?

To craft coding challenges that truly assess a candidate's abilities, focus on tasks that mirror real-world scenarios relevant to the job. This could mean asking them to build a small feature, debug an issue, or design a REST API. Make sure the requirements are clear and goal-oriented - define the inputs and outputs, but leave the implementation details flexible. This approach allows you to evaluate their problem-solving and design skills.

Keep the scope realistic. Challenges should take no more than 30–60 minutes to complete. Include practical constraints, like error handling or performance considerations, to reflect actual production environments. To make the process smoother, consider offering starter code or a sandbox environment. This way, candidates can focus on solving the challenge rather than getting bogged down in setup.

When evaluating, look beyond just the solution. Pay attention to how the candidate communicates their approach. Clean, readable code paired with thoughtful explanations often says more about their skills than the final output alone.

Tools like daily.dev Recruiter can simplify the process by connecting you with pre-qualified developers who are already actively engaged in the field. This can make your interview process both more efficient and meaningful.

What should recruiters include in a scoring rubric to evaluate a developer's problem-solving skills?

A well-rounded scoring rubric for gauging a developer's problem-solving skills should assess the entire journey - from grasping the problem to delivering a working solution. Here's what to focus on:

- Problem Identification: Does the candidate clearly define the problem, outline its constraints, and establish success criteria?

- Planning and Approach: Can they break the problem into smaller, manageable parts and outline a logical plan to solve it?

- Technical Execution: Is their solution accurate, efficient, and easy to understand, employing appropriate algorithms and data structures?

- Debugging and Testing: Are they capable of identifying and resolving errors while validating their solution with tests, including edge cases?

- Code Quality: Does their code adhere to best practices in terms of readability, modularity, and maintainability?

- Communication: Can they effectively explain their thought process and collaborate when needed?

Using this rubric within daily.dev Recruiter allows recruiters to consistently evaluate candidates across these dimensions, ensuring a thorough and balanced assessment of their technical and problem-solving skills.

Why are open-ended problems effective for assessing a developer's problem-solving skills?

Open-ended problems are a fantastic way to assess a developer's problem-solving abilities because they go beyond seeking a single correct answer. These scenarios push candidates to think critically, define the problem clearly, and break it into manageable pieces. Often, they also require making logical assumptions - just like the kinds of challenges developers face in real-world projects, where ambiguity is common.

When candidates explain their thought process, they reveal how they organize their reasoning, evaluate trade-offs, and explore alternative solutions. This gives recruiters a deeper understanding of the candidate’s analytical mindset, flexibility, and ability to think creatively - qualities that extend far beyond technical coding skills. Open-ended problems provide a window into how developers tackle challenges, making them an essential tool in technical interviews.
