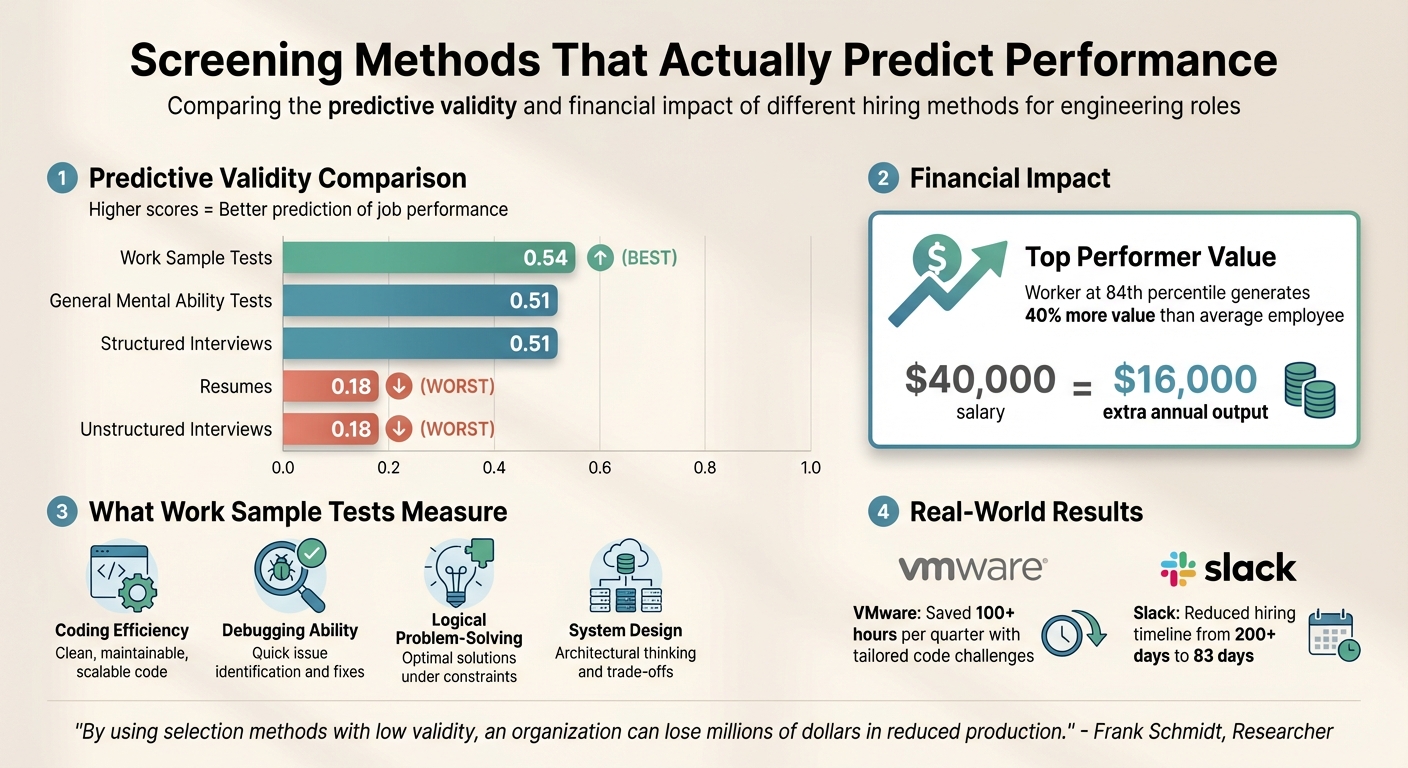

Hiring engineers? Start with smarter screening. Resumes and unstructured interviews don’t predict job success. Research shows resumes have a predictive validity of just 0.18, while work sample tests score much higher at 0.54. The solution? Use targeted screening questions that evaluate practical skills like debugging, coding efficiency, and system design.

Here’s why this approach works:

- Work sample tests mimic job tasks, offering better insights into a candidate’s abilities.

- Companies like Slack and VMware reduced hiring time and improved outcomes by replacing traditional methods with tailored coding challenges.

- Strong screening questions assess skills like algorithmic thinking, debugging, and scalability.

Replace generic questions with tasks reflecting actual work, such as:

- Debugging existing code.

- Designing systems for scalability.

- Solving layered technical problems.

Behavioral questions also matter. They reveal how candidates handle challenges, work with teams, and solve real problems under pressure. For example:

- "Describe a time you optimized a slow feature."

- "Walk me through your worst production issue."

Key takeaway: Combine technical and behavioral questions for a more predictive hiring process. Tools like daily.dev Recruiter can help streamline this by connecting you with pre-qualified candidates and automating assessments.

Figure: Predictive Validity of Hiring Methods: Work Sample Tests vs Traditional Screening

How Screening Questions Predict Engineering Performance

Effective screening questions succeed because they focus on practical, job-relevant skills. Instead of quizzing candidates on obscure algorithms or trivia, these questions evaluate the core abilities engineers rely on daily: writing clean, efficient code, debugging effectively, and solving realistic problems under constraints.

Research backs this up. Work sample tests, which mimic actual job tasks, show a predictive validity of 0.54 - a strong indicator of future job performance. General mental ability tests and structured interviews each score 0.51, while unstructured interviews trail at 0.38 and years of job experience, the main signal a resume carries, score just 0.18. The financial difference is striking: a top-performing worker at the 84th percentile generates extra output worth roughly 40% of salary compared with an average employee. For a $40,000 salary, that’s an extra $16,000 in annual output.

"The correlation between who did really well in the interview process and who performs really well at work is really weak... Interviewing should be thought of as information gathering. You should consciously design the process to be the most predictive of future job performance." - Erik Bernhardsson, CTO, Better.com

The key to effective screening lies in aligning questions with measurable performance outcomes. These include assessing algorithmic complexity (does the solution meet optimal Big O requirements?), program validity (does it handle all test cases correctly?), memory consumption, and debugging efficiency (can candidates identify issues without overly relying on print statements?).

Key Performance Indicators to Evaluate

Strong screening questions target four critical areas that predict engineering success:

- Coding efficiency: This measures whether candidates write clean, maintainable, and scalable code - not just code that works, but code that performs well under real-world conditions.

- Debugging ability: Engineers spend significant time troubleshooting, so testing how quickly candidates can identify and fix issues in existing code is crucial. This skill directly correlates with their ability to handle unexpected challenges.

- Logical problem-solving: The best questions challenge candidates to think deeply. For example, start with a straightforward problem, then add layers like technical constraints (e.g., scaling for different regions) or business goals (e.g., ensuring 99.99% uptime). This approach helps distinguish between those who deliver optimal solutions, those who solve problems suboptimally, and those who struggle altogether.

- System design proficiency: For senior roles, asking about architectural decisions - such as designing a service to handle a million events per minute - offers insight into their ability to balance technical trade-offs and business needs.

Several of these metrics can be measured programmatically. For instance, you can record execution time and memory usage automatically and infer algorithmic complexity from how run time grows with input size. This reduces interviewer bias and keeps scoring consistent across candidates. Other indicators, like familiarity with CI/CD pipelines or debugging workflows, also provide valuable insights.
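As a rough illustration of automated measurement, the sketch below times a single run of a submission and records its peak memory using only the Python standard library. The `measure` helper and the `candidate_solution` function are hypothetical names invented for this example; a real grading harness would also need sandboxing, repeated runs, and timeouts.

```python
import time
import tracemalloc


def measure(func, *args):
    """Run func(*args) once; return (result, seconds, peak_bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args)
        elapsed = time.perf_counter() - start
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def candidate_solution(n):
    # Hypothetical submission: sum of squares below n.
    return sum(i * i for i in range(n))


result, seconds, peak_bytes = measure(candidate_solution, 100_000)
print(f"result={result} time={seconds:.4f}s peak={peak_bytes} bytes")
```

Running the same measurement at several input sizes and comparing how the time grows gives a coarse signal about algorithmic complexity, though timings on shared hardware are noisy.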

Why Standard Screening Methods Fall Short

Traditional screening methods often fail because they focus on the wrong skills. Common questions like FizzBuzz have become so overused that candidates memorize answers, bypassing the need for real problem-solving. Similarly, brain teasers and whiteboard coding tests assess stress tolerance and syntax recall rather than practical engineering abilities - ignoring the fact that engineers typically work with IDEs, documentation, and debugging tools.

The statistics highlight the issue: around 33% of candidates fail simple three-line code-fix tasks, even when their resumes boast advanced experience. Worse, academic jargon like "state machine" or "dependency injection" can alienate experienced engineers who know the concepts but not the textbook terms. This is particularly problematic given that only 40% of software engineers hold formal computer science degrees.

Unstructured interviews add to the problem. Without consistent questions or scoring rubrics, comparisons between candidates become unfair and biased. Shockingly, 10% of answers in company question banks are incorrect, leading to the rejection of highly qualified candidates who actually solved problems correctly.

The financial impact of these flawed methods is massive. Companies using low-validity screening approaches can lose millions in reduced productivity, putting themselves at a competitive disadvantage. For example, Zapier improved their hiring outcomes by replacing traditional whiteboard interviews with a 4-hour take-home coding challenge. This approach, which simulated real work environments using GitHub automation, provided far more predictive data.

"By using selection methods with low validity, an organization can lose millions of dollars in reduced production. In a competitive world, these organizations are unnecessarily creating a competitive disadvantage for themselves." - Frank Schmidt, Author/Researcher, The Validity and Utility of Selection Methods in Personnel Psychology

Writing Effective Technical Screening Questions

Technical screening questions should reflect the actual work engineers perform. Instead of focusing on trivia or abstract puzzles, these questions should assess how candidates handle real-world tasks like debugging production code, refactoring legacy systems, or reviewing pull requests. This approach not only provides a better understanding of a candidate's skills but also keeps the process engaging with practical scenarios.

When designing these questions, consider layering complexity. Start with a straightforward problem, then introduce additional constraints to challenge experienced candidates. For instance, you could begin with "Write a function to find the maximum path sum in a binary tree", and follow up with "Optimize it for handling very large trees" or "Account for negative node values." This method allows junior engineers to demonstrate their foundational skills while giving senior candidates a chance to showcase advanced problem-solving.
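To make the layering concrete, here is a minimal Python sketch of one possible reference answer to the base question that already covers the "negative node values" follow-up. The `Node` class and the `max_path_sum` name are assumptions made for illustration, not part of any particular question bank.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def max_path_sum(root: Node) -> int:
    """Maximum sum over any node-to-node path containing at least one node."""
    best = root.value

    def gain(node: Optional[Node]) -> int:
        nonlocal best
        if node is None:
            return 0
        # Drop a child branch if it would lower the total (negative values).
        left = max(gain(node.left), 0)
        right = max(gain(node.right), 0)
        # Best path that bends at this node.
        best = max(best, node.value + left + right)
        # A path continuing upward can use only one branch.
        return node.value + max(left, right)

    gain(root)
    return best


tree = Node(-10, Node(9), Node(20, Node(15), Node(7)))
print(max_path_sum(tree))  # 42, via the path 15 -> 20 -> 7
```

The recursive version would hit Python's recursion limit on a very deep tree, which makes the "optimize it for very large trees" follow-up a natural prompt for discussing an iterative post-order traversal.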

Before rolling out questions to candidates, test them internally with your team. This ensures clarity, appropriate difficulty, and reduces errors in the answer key - an issue that can lead to rejecting qualified candidates unnecessarily.

Allow candidates to choose their preferred programming language unless the role requires expertise in a specific one. Strong engineers can adapt to new stacks quickly, and forcing them to use an unfamiliar syntax during a high-pressure interview could result in false negatives. Focus on evaluating their logic and problem-solving abilities rather than their ability to recall exact syntax.

"It's usually fine to let a candidate make up library functions that are 'close' to what the real library function is if they momentarily forget specific library functions - but equally, inventing 'magical' library functions that solve too much of the problem is not a good indicator." – Holloway Guide

To ensure fairness and consistency, create clear evaluation rubrics before interviews. Define what makes a response strong, average, or weak. For automated screenings, you might measure algorithmic complexity by setting execution time limits that favor optimal solutions like O(N) or O(log N). These principles guide the development of targeted questions across key categories.

Core Coding Questions for All Roles

Core coding questions should assess skills every engineer needs, regardless of their seniority. These questions evaluate coding efficiency, data structure knowledge, and algorithmic thinking.

- Binary Tree Path Sum: Ask candidates to find the maximum path sum between any two nodes in a binary tree. This tests their understanding of tree traversal, recursion, and handling edge cases.

- Data Structure Comparison: Have candidates compare arrays and linked lists, explaining their use cases. Look for discussions on memory allocation, performance during insertion or deletion, and cache efficiency.

- Hash Table Collision Handling: Ask candidates to explain how hash tables enable efficient data retrieval and describe strategies for handling collisions, such as chaining or open addressing, along with load management considerations.

- Big-O Application: Challenge candidates to explain Big-O notation and provide an example of how they’ve used it to optimize code. Strong responses should cover performance trade-offs and real-world optimizations.

- Rotating Numbers Problem: Ask candidates to identify all valid 180-degree rotating numbers up to a given limit. This tests their ability to recognize patterns and handle boundary conditions carefully.
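For the hash table question, an interviewer may find it useful to have a small reference implementation to compare answers against. The sketch below shows separate chaining with resizing once a load factor is exceeded. The class name `ChainedHashMap`, the default capacity of 8, and the 0.75 load threshold are illustrative assumptions, and Python's built-in `dict` uses a different strategy.

```python
class ChainedHashMap:
    """Toy hash map using separate chaining; not a replacement for dict."""

    def __init__(self, capacity=8, max_load=0.75):
        self._buckets = [[] for _ in range(capacity)]
        self._size = 0
        self._max_load = max_load

    def _bucket(self, key):
        return self._buckets[hash(key) % len(self._buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (existing, _) in enumerate(bucket):
            if existing == key:
                bucket[i] = (key, value)  # overwrite existing key
                return
        bucket.append((key, value))  # colliding keys share the bucket's list
        self._size += 1
        if self._size / len(self._buckets) > self._max_load:
            self._resize()

    def get(self, key, default=None):
        for existing, value in self._bucket(key):
            if existing == key:
                return value
        return default

    def _resize(self):
        # Double the bucket count and rehash every entry.
        entries = [pair for bucket in self._buckets for pair in bucket]
        self._buckets = [[] for _ in range(2 * len(self._buckets))]
        for key, value in entries:
            self._bucket(key).append((key, value))

    def __len__(self):
        return self._size
```

A strong candidate should be able to explain why lookups stay close to constant time only while chains remain short, and how open addressing trades pointer overhead for more careful deletion handling.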

A 2018 TestDome study illustrates the value of well-designed core questions. When 415 candidates for a .NET Web Developer role were screened with two simple coding problems, only 34% passed the initial filter. Of those, just 20% met the required performance criteria in the follow-up assessment. This highlights how even basic questions can efficiently identify candidates who struggle with foundational skills.

Problem-Solving and Debugging Scenarios

Problem-solving and debugging questions assess logical reasoning, debugging efficiency, and optimization skills - abilities engineers need to solve production issues and improve system performance.

- Word Frequency Optimization: Present a scenario requiring candidates to process a large dataset of words and propose a memory-efficient data structure. Strong candidates might suggest using a trie to take advantage of common prefixes.

- Buggy Code Review: Share a short Python code snippet containing inefficiencies and subtle bugs. Ask candidates to review it and suggest improvements, mimicking real-world code review practices.

- Robot Navigation Algorithm: Challenge candidates to design an algorithm for a room-cleaning robot that can only move forward and turn, without GPS or an initial map. This tests spatial reasoning and state management.

- Large Number Arithmetic: Ask how they would add two strings representing numbers larger than typical integer limits, ensuring they understand low-level arithmetic operations.

- Production Incident Analysis: Request candidates to describe a past critical production issue they resolved, detailing how they diagnosed the problem and implemented a long-term fix. This is particularly useful for assessing candidates with incident management experience.
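As one example from the list above, the large number arithmetic question has a compact reference answer. This is a minimal sketch that assumes both inputs are non-negative decimal strings; the function name `add_digit_strings` is made up for this example. Python integers are already arbitrary precision, so the point of the exercise is the digit-by-digit carry logic rather than the result itself.

```python
def add_digit_strings(a: str, b: str) -> str:
    """Add two non-negative decimal integers given as strings."""
    i, j = len(a) - 1, len(b) - 1
    carry = 0
    digits = []
    # Walk both strings from the least significant digit.
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += ord(a[i]) - ord("0")
            i -= 1
        if j >= 0:
            total += ord(b[j]) - ord("0")
            j -= 1
        digits.append(chr(total % 10 + ord("0")))
        carry = total // 10
    return "".join(reversed(digits)) or "0"


print(add_digit_strings("999", "1"))  # 1000
```

Useful follow-ups include input validation, leading zeros, and negative numbers, none of which this sketch handles.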

System Design and Scalability Questions

While coding skills are essential, system design questions help identify senior engineers who excel at architectural thinking and trade-off analysis. These questions should be open-ended, allowing candidates to explore multiple valid solutions.

- High-Traffic Event Service: Ask candidates to design a system capable of handling a high volume of user activity events while ensuring reliable processing of critical transactions.

- DDoS-Resilient Streaming Service: Present the challenge of designing a streaming platform that remains functional during a large-scale DDoS attack. Encourage candidates to discuss both basic and advanced protective measures.

- API Quota Management System: Describe a scenario where candidates must prioritize critical API calls under strict rate limits. Look for solutions involving priority queues or caching.

- Gmail-Scale Search Engine: Challenge candidates to propose a high-level architecture for a search engine handling billions of emails with sub-second query times. Junior candidates might suggest basic full-text indexing, while senior candidates should include advanced techniques like inverted indexes and sharding.
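For the API quota question, one answer an interviewer might expect is a priority queue that releases the most critical calls first within each rate-limit window. The sketch below is a simplified, single-process illustration. The `QuotaScheduler` class, its method names, and the convention that lower numbers mean higher priority are all assumptions made for this example.

```python
import heapq
import itertools
from typing import List


class QuotaScheduler:
    """Release queued API calls in priority order, up to a fixed quota per window."""

    def __init__(self, quota_per_window: int):
        self.quota = quota_per_window
        self._heap = []
        # Monotonic counter keeps FIFO order among calls with equal priority.
        self._order = itertools.count()

    def submit(self, priority: int, call_name: str) -> None:
        # Lower number = more critical.
        heapq.heappush(self._heap, (priority, next(self._order), call_name))

    def next_window(self) -> List[str]:
        """Return the calls allowed in this window; the rest stay queued."""
        released = []
        while self._heap and len(released) < self.quota:
            _, _, call_name = heapq.heappop(self._heap)
            released.append(call_name)
        return released


scheduler = QuotaScheduler(quota_per_window=2)
scheduler.submit(2, "analytics")
scheduler.submit(0, "payment")
scheduler.submit(1, "profile")
scheduler.submit(0, "refund")
print(scheduler.next_window())  # ['payment', 'refund']
print(scheduler.next_window())  # ['profile', 'analytics']
```

Senior candidates would likely go further and discuss starvation of low-priority calls, caching of repeatable responses, and how the queue behaves across multiple service instances.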

System design questions should align with the following expectations:

| Skill Area | Junior Engineer Expectations | Senior Engineer Expectations |
|---|---|---|
| Problem Solving | Focus on syntax, basic logic, and completing tasks. | Identify sub-problems, consider edge cases, and break down complex systems. |
| Optimization | Provide correct but potentially suboptimal solutions. | Implement optimal solutions with clear discussion of algorithmic complexity. |
| System Design | Understand basic components and interfaces. | Discuss trade-offs, scalability, security, and long-term maintainability. |

Behavioral Questions for Predicting Future Performance

Technical skills are just one piece of the puzzle when hiring. While technical tests evaluate a candidate's current abilities, behavioral questions dig deeper, uncovering how they apply those skills in real-world situations. A developer might write flawless code, but if they struggle to communicate with stakeholders or panic under production pressure, they might not be the right fit for your team. Behavioral questions help you understand how candidates handle challenges, resolve conflicts, learn from mistakes, and adapt to unexpected changes.

Why do these questions matter so much? Because past behavior is a strong indicator of future performance. Unlike generic questions about strengths or weaknesses, behavioral questions push candidates to share specific examples from their experience. This makes it harder for them to rely on rehearsed answers and gives you a clearer picture of their work habits. For example, asking how they managed a critical production issue or resolved a disagreement with a product manager can reveal patterns in their decision-making and teamwork.

Structured behavioral interviews - where every candidate answers the same set of questions using a consistent evaluation system - are far more reliable than unstructured conversations. Soft skills also carry real weight: 92% of talent professionals say soft skills are more important than technical skills. Yet, many hiring processes still focus heavily on coding ability alone.

"The correlation between who did really well in the interview process and who performs really well at work is really weak... You should consciously design the process to be the most predictive of future job performance." – Erik Bernhardsson, CTO, Better.com

The STAR method - Situation, Task, Action, Result - is a proven way to evaluate responses. Strong answers clearly outline the context, objectives, actions taken, and measurable outcomes. For example, "I improved our deployment process" is vague, but explaining the initial problem, steps taken, and quantifiable results provides meaningful insight.

Examples of Effective Behavioral Questions

Here are some examples of behavioral questions that can help you assess a candidate's soft skills and problem-solving abilities:

"Describe a time you optimized a slow feature."

This question evaluates how candidates approach performance challenges. Look for answers that include identifying bottlenecks, weighing optimization strategies, and measuring results, such as reducing API response times.

"Describe a time you and a stakeholder differed on a technical approach. How did you resolve it?"

This reveals how candidates balance technical priorities with business needs. Strong answers demonstrate active listening, clear communication, and the ability to find compromises.

"Walk me through your worst production incident."

For senior engineers, this question tests their ability to manage high-pressure situations. Assess how they prioritized tasks, communicated with affected parties, and implemented improvements afterward. Watch out for answers that lack accountability or shift blame.

"Describe a project where the requirements changed significantly midway through development."

This question highlights adaptability and communication skills. Look for candidates who explain how they reassessed the situation, renegotiated timelines, and kept all stakeholders informed.

To ensure fairness and consistency, use a standardized rubric to score responses. Define what makes an answer poor, mixed, good, or excellent. For instance, in the optimization question, a poor answer might lack any performance metrics, while an excellent one includes profiling methods, trade-off analysis, and specific improvements.

Combining Behavioral and Technical Screening

Relying solely on either behavioral or technical screening won’t give you the full picture. The most effective hiring processes combine both. Start with technical screening to confirm baseline skills, then use behavioral questions to see how candidates apply those skills in practical situations. Together, these methods reveal not just technical expertise but also judgment, adaptability, and communication.

For example, if you're hiring a senior backend engineer, you might pair a system design question with a behavioral one about handling complex technical decisions. The technical question shows their knowledge, while the behavioral question demonstrates how they learn and reflect on their experiences.

Tailor your screening process to reflect the actual responsibilities of the role. If the job involves on-call rotations, ask about incident management. If it requires collaboration with cross-functional teams, explore how they’ve navigated competing priorities. The goal is to minimize the gap between how a candidate performs in an interview and how they'll perform on the job.

With tools like daily.dev Recruiter, you can customize screening questions to match the key attributes needed for success in the first 90 days. For instance, for a DevOps role, you might focus on "incident response", "automation mindset", and "cross-team communication." Craft a couple of behavioral questions for each attribute and evaluate every candidate using the same criteria. This structured approach not only improves hiring accuracy but also enhances the candidate experience - rejected candidates are 35% more satisfied with structured processes compared to unstructured ones.


Using Predictive Screening in daily.dev Recruiter

daily.dev Recruiter takes predictive screening to the next level by connecting you with developers who are already engaged and pre-qualified. This means you’re not just another email in a crowded inbox - you’re starting from a place of trust. By focusing on developers who are actively participating in the platform, you can streamline your hiring process with tailored questions that match your team's exact needs. This approach leads to better response rates, clearer insights, and candidates who are genuinely interested in your role.

Setting Up Custom Screening Questions

Work closely with your engineering managers to define the specific skills a role requires. For example, a backend engineer might need expertise in database optimization, while a frontend role might prioritize component architecture. This competency blueprint ensures you’re assessing the right skills, not just trendy ones.

"A well-defined competency blueprint is your single source of truth. It prevents you from testing for trendy but irrelevant skills and keeps your entire hiring team aligned." – CloudDevs

daily.dev Recruiter lets you create screening questions based on these competencies. Instead of relying on abstract algorithm puzzles, focus on real-world tasks like debugging legacy code, reviewing code, or building small APIs. These practical challenges reflect the actual work candidates will do.

To ensure fairness, use a standardized scoring rubric - for instance, a 1-5 scale evaluating correctness, code quality, efficiency, and testing. This reduces bias and ensures consistency. Before rolling out new questions, have a few internal engineers test them to identify potential issues and confirm the answers are accurate.
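A rubric like the one described can be encoded so that every reviewer scores the same criteria on the same scale. The following is a minimal sketch, not a feature of any specific tool. The criterion keys, the optional weights, and the `rubric_score` function are assumptions chosen to mirror the four dimensions named above.

```python
CRITERIA = ("correctness", "code_quality", "efficiency", "testing")


def rubric_score(scores: dict, weights: dict = None) -> float:
    """Weighted mean of 1-5 rubric scores; rejects missing or out-of-range values."""
    weights = weights or {name: 1.0 for name in CRITERIA}
    total = 0.0
    for name in CRITERIA:
        value = scores[name]  # KeyError if a reviewer skipped a criterion
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
        total += weights[name] * value
    return total / sum(weights[name] for name in CRITERIA)


review = {"correctness": 4, "code_quality": 3, "efficiency": 5, "testing": 2}
print(rubric_score(review))  # 3.5
```

Failing loudly on a missing or out-of-range score is a deliberate choice here: it keeps incomplete reviews from silently skewing comparisons between candidates.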

Keep take-home projects manageable, ideally between 2–4 hours. Assignments that take 10+ hours can discourage top candidates, leading to drop-offs. Also, use clear, straightforward language in your questions. Avoid overly academic jargon that might alienate experienced engineers with practical expertise.

Analyzing Candidate Responses for Better Hiring

Once candidates complete your screening questions, analyze the results to refine your process. Look at pass/fail rates and drop-off points to identify ambiguous or poorly calibrated questions. If most candidates either ace or fail a question, it’s a sign the question might need adjustment.
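One simple way to spot poorly calibrated questions is to compute each question's pass rate and flag the extremes. The sketch below assumes results are stored as lists of booleans per question. The 20% and 80% thresholds and the `flag_questions` name are illustrative assumptions that you would tune to your own data.

```python
def flag_questions(results: dict, low: float = 0.2, high: float = 0.8) -> dict:
    """Map question id -> 'too hard' / 'too easy' for pass rates outside [low, high]."""
    flags = {}
    for question, outcomes in results.items():
        if not outcomes:
            continue  # no attempts yet, nothing to judge
        pass_rate = sum(outcomes) / len(outcomes)
        if pass_rate < low:
            flags[question] = "too hard"
        elif pass_rate > high:
            flags[question] = "too easy"
    return flags


# Hypothetical results: True means the candidate passed the question.
results = {
    "debug-legacy-parser": [True, False, True, False, True],
    "fizzbuzz": [True, True, True, True, True],
    "lock-free-queue": [False, False, False, False, False],
}
print(flag_questions(results))
# {'fizzbuzz': 'too easy', 'lock-free-queue': 'too hard'}
```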

The key metric to track is predictive validity - how well a candidate’s screening score correlates with their actual job performance. Compare assessment scores with performance reviews 6–12 months after hiring to see if your screening questions are effective. This iterative approach helps you continuously improve.
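Predictive validity is usually reported as a correlation coefficient, so a first estimate can be computed with a plain Pearson correlation between screening scores and later review ratings. The sketch below uses only the standard library, and the sample numbers are invented purely to show the call. Keep in mind that such an estimate is based only on candidates who were hired, which tends to understate the true correlation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)


# Illustrative data only: screening scores and 12-month review ratings for six hires.
screening = [62, 71, 80, 85, 90, 95]
reviews = [3.0, 3.2, 3.1, 4.0, 4.2, 4.5]
print(round(pearson_r(screening, reviews), 2))
```

With only a handful of hires the estimate will be unstable, so it is worth waiting for a larger sample before retiring or promoting a question on this basis.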

daily.dev Recruiter also provides automated technical analysis, scoring candidates on code validity, execution performance, and memory usage. This data-driven approach allows you to compare candidates objectively and identify patterns in how top performers solve problems compared to those who struggle.

For example, between 2013 and 2015, VMware replaced traditional resume screenings with tailored code challenges focused on virtualization. This saved their engineers over 100 hours per quarter and improved the success rate of candidates advancing to onsite interviews. Similarly, Slack introduced a code review test that reduced their hiring timeline for software engineers from over 200 days to just 83 days.

Once you’ve refined your questions using candidate data, take it a step further with systematic A/B testing.

Testing Screening Questions with A/B Testing

A/B testing lets you experiment with different versions of screening questions to see what works best. For instance, you could compare a generic algorithm problem with a domain-specific task to measure which one leads to better completion rates and higher-quality candidates.

The goal is to improve your signal-to-noise ratio, identifying a candidate’s true skills quickly. Research shows that tailored questions lead to nearly 10% higher completion rates compared to generic ones. Industry-specific challenges also tend to engage candidates more effectively than abstract problems.
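When comparing completion rates between two question variants, it helps to check whether the gap is larger than chance would explain. The sketch below applies a standard two-proportion z-test using only the standard library. The function name and the sample counts are assumptions for illustration, and the normal approximation is only reasonable with a decent number of candidates in each arm.

```python
from math import erf, sqrt


def two_proportion_z_test(done_a, total_a, done_b, total_b):
    """Return (z, two-sided p-value) for the difference in completion rates."""
    p_a, p_b = done_a / total_a, done_b / total_b
    pooled = (done_a + done_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("completion rates are identical at 0% or 100%")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical counts: variant A is the generic problem, variant B the tailored one.
z, p = two_proportion_z_test(done_a=60, total_a=100, done_b=80, total_b=100)
print(f"z={z:.2f} p={p:.4f}")
```

A small p-value suggests the difference in completion rates is unlikely to be noise, but it says nothing about candidate quality, which still has to be judged against the shared rubric.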

Run tests by comparing how candidates perform on different question types. Track responses to identify three performance tiers: optimal solutions, correct but suboptimal approaches, and incorrect attempts. This breakdown helps you distinguish exceptional candidates from those who are merely adequate or underqualified.

To keep assessments consistent, use the same grading rubric across all variations. This ensures any differences in results reflect candidate performance, not inconsistencies in scoring. Regularly audit your question bank, since around 10% of answers in company question banks are incorrect, which can frustrate top candidates.

"Interviewing should be thought of as information gathering. You should consciously design the process to be the most predictive of future job performance." – Erik Bernhardsson, CTO of Better.com

Zapier offers a great example of this approach. Over two years, they transitioned from no coding tests to live pair-programming sessions and finally to an automated take-home skills test. By automating the setup and using a standardized rubric, they created a fairer, less stressful evaluation process that focused on real-world problem-solving. Adopting a similar iterative approach can help you refine your screening process and improve hiring outcomes.

Conclusion

Traditional hiring methods like resume reviews and unstructured interviews often fall short when it comes to predicting engineering performance. In contrast, work sample tests and real-world simulations provide far more reliable results, helping you identify engineers who are truly prepared to excel.

The benefits of improving your screening methods are substantial. Research highlights that top performers at the 84th percentile can generate $16,000 more in annual output than average workers in a role paying $40,000. Over time, this translates into millions of dollars in added productivity.

"In economic terms, the gains from increasing the validity of hiring methods can amount over time to literally millions of dollars." – Frank Schmidt, Researcher

daily.dev Recruiter takes this concept a step further by connecting you with pre-qualified developers who are already active and engaged on the platform. This eliminates the need for cold outreach or outdated profiles, leading to higher response rates, quicker hiring processes, and stronger long-term results.

To refine your hiring process, start by identifying the key skills your role demands. Design questions and tasks that reflect real-world scenarios, use structured evaluations, and continually improve based on data. With tools like daily.dev Recruiter, you can create a hiring process that's faster, fairer, and more effective at predicting success.

FAQs

How do work sample tests help assess engineering candidates more effectively?

Work sample tests stand out as a powerful tool because they replicate the actual tasks engineers handle in their roles. By observing how candidates tackle these hands-on challenges, employers get a better sense of their technical expertise, problem-solving approach, and preparedness for the job.

Unlike theoretical questions or broad assessments, these tests zero in on the exact skills required for the position. This method allows for a more precise evaluation of a candidate's ability to perform effectively in the role.

What skills should technical screening questions evaluate to predict engineering performance?

Technical screening questions should aim to gauge a candidate's essential knowledge, problem-solving skills, and technical proficiency directly aligned with the job. This means delving into their grasp of core concepts like coding, data structures, and algorithms, while also evaluating how they tackle challenging, practical problems.

To create a thorough evaluation, focus on questions that:

- Examine basic knowledge such as programming fundamentals and algorithmic thinking.

- Challenge problem-solving abilities with practical, scenario-based tasks.

- Probe specialized expertise in areas like system design, performance optimization, or specific technologies (e.g., AI or cloud platforms).

Customizing questions to match the role's demands helps you assess how candidates might approach actual engineering challenges, making it easier to spot those with the right skills and mindset for success.

How do behavioral questions help predict an engineer's performance?

Behavioral questions are an effective way to gauge an engineer's potential performance because they delve into how candidates have tackled real-world challenges before. These questions reveal not just technical skills, but also how individuals apply their knowledge to practical problems.

By examining past experiences, interviewers can spot patterns in a candidate's behavior, offering a clearer sense of how they might handle similar situations in the workplace. This method is particularly useful for evaluating both technical expertise and essential soft skills like teamwork and flexibility.
