AI-driven recruitment tools promise speed and efficiency, but they often fall short without human oversight. Here's why:

- AI struggles with nuance: Developer careers are diverse and don't fit neatly into predefined categories. This leads to mismatches and missed opportunities.

- Bias in training data: AI systems inherit biases from historical hiring trends, excluding millions of qualified candidates.

- Generic outreach: Automated messages lack empathy, leading to lower engagement and trust.

- Limited assessment of soft skills: AI can't evaluate traits like communication or team compatibility.

The solution? Treat AI as a copilot. Use it for repetitive tasks like resume screening but rely on humans for personalized outreach, evaluating potential, and building trust. Combining automation with human judgment improves hiring quality and ensures a better candidate experience.

Figure: AI vs Human Recruiting: Key Statistics on Bias, Effectiveness, and Candidate Experience

The Core Problem: Where AI Sourcing Software Falls Short

AI Struggles to Read Developer Profiles Accurately

Developer careers often take unconventional paths. A developer might shift from front-end work to DevOps, contribute to open-source projects, or gain expertise through self-study instead of formal education. AI sourcing tools tend to compress these career journeys into a single score, overlooking the qualities that make candidates stand out. Experts call the underlying mismatch the "Boolean Clash": traditional recruiting relies on exact keyword matching, while AI ranks candidates by statistical probability.

This disconnect leads to serious hiring challenges. AI systems often fail to verify exaggerated skills in resumes, as they rely solely on text-based screening. Genuine expertise typically becomes apparent only through interactive assessments. At the same time, these tools frequently miss highly skilled developers whose backgrounds don’t align with the statistical patterns in their training data. Such inaccuracies not only hinder hiring but also amplify deeper problems rooted in historical biases.
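To make the "Boolean Clash" concrete, the sketch below scores a hypothetical career changer two ways. The skill sets are invented for illustration, and Jaccard similarity (shared terms divided by all terms) is a deliberately simple stand-in for the statistical models real sourcing tools use, which are typically far more complex.

```python
"""Toy contrast between Boolean keyword filtering and a similarity score.

Assumption: Jaccard similarity stands in for the statistical ranking models
that real sourcing tools use. The skill sets below are hypothetical.
"""


def boolean_match(profile: set[str], required: set[str]) -> bool:
    """Classic Boolean search: pass only if every required term appears."""
    return required <= profile


def jaccard_score(profile: set[str], required: set[str]) -> float:
    """Share of terms the two sets have in common, from 0.0 to 1.0."""
    union = profile | required
    if not union:
        return 0.0
    return len(profile & required) / len(union)


required = {"kubernetes", "terraform", "python", "ci/cd"}

# A hypothetical developer who moved from front-end work into DevOps.
career_changer = {"react", "typescript", "kubernetes", "ci/cd", "python"}

print(boolean_match(career_changer, required))  # False: "terraform" is missing
print(jaccard_score(career_changer, required))  # 0.5: 3 shared terms out of 6
```

The strict filter rejects the candidate outright, while the similarity score only ranks them as a partial match. Neither number says whether this person can do the job, which is the gap a human reviewer fills.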

How Biased Training Data Affects Recruitment Results

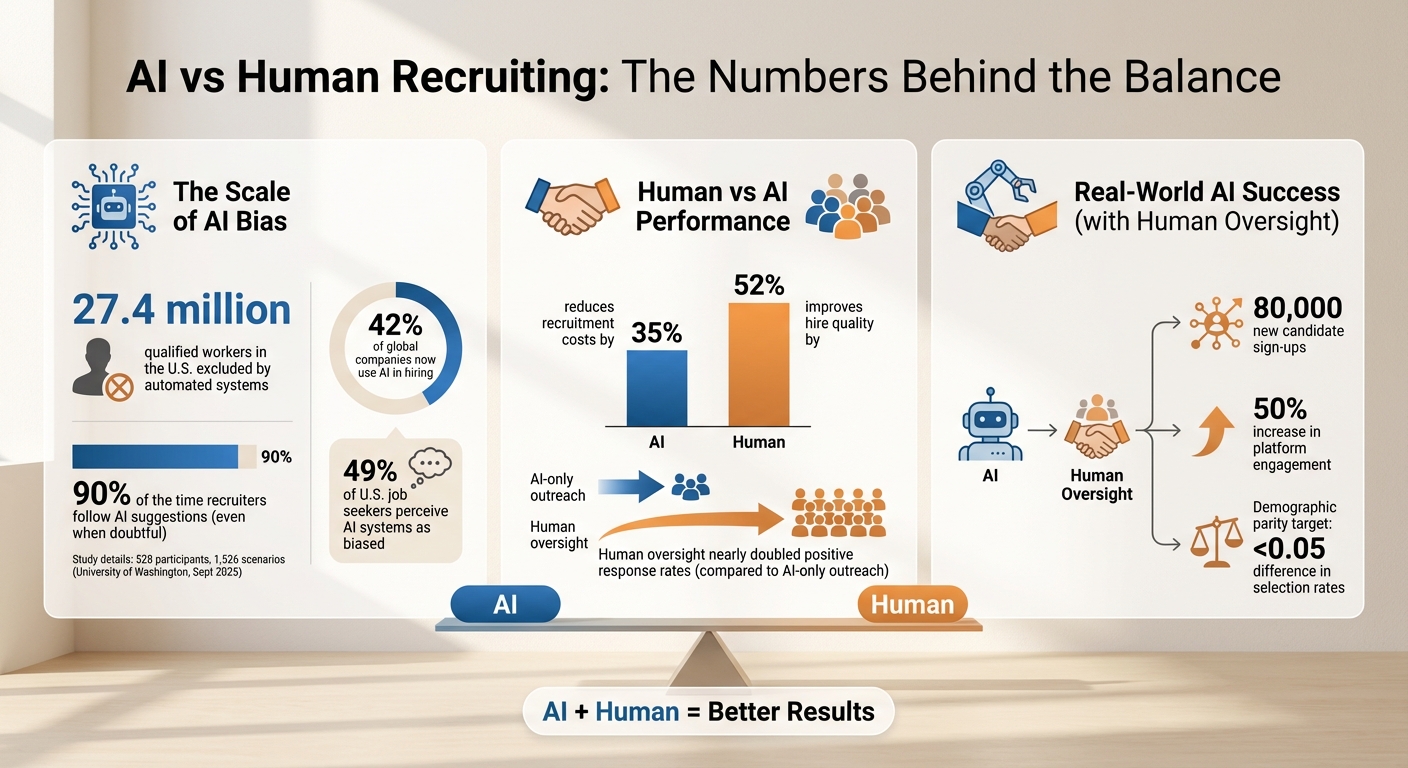

AI systems learn from historical hiring data, which means they inherit the biases embedded in those decisions. If past hiring trends favored certain universities or penalized career gaps, the AI treats these patterns as indicators of success. The impact is staggering: 27.4 million qualified workers in the U.S. are excluded by automated systems simply because they don't fit the AI's expected profile.

One telling example comes from 2018, when a law firm discovered that its machine-learning tool had flagged "playing lacrosse" and being named "Jared" as top predictors of job success. The absurd result shows how AI relies on arbitrary proxies for competence: correlations with no connection to actual developer skills. Worse, human recruiters often trust these biased recommendations. Research shows that recruiters follow them 90% of the time, even when they doubt their quality. A September 2025 study by Kyra Wilson at the University of Washington, involving 528 participants and 1,526 scenarios, confirmed the trend: recruiters followed AI-driven race-based preferences at the same 90% rate, even after completing unconscious bias training. These biases not only shrink the talent pool but also make hiring feel increasingly impersonal.

Generic Outreach Messages Fail to Connect

AI’s shortcomings go beyond misreading profiles and perpetuating bias: it also struggles to create meaningful engagement. AI-generated outreach often feels impersonal and lacks the empathy needed to resonate with developers. Developers receive countless similar messages every week, so these communications come across as empty and forgettable. As Joe Fuller, Professor of Management Practice at Harvard Business School, puts it:

"Tech companies are executing a vision, as expressed to them by their clientele, which is flawed... a quest for 'hyperefficiency'".

Trust also erodes when no one can explain why the AI chose to contact a specific developer, because even recruiters don’t understand its opaque logic. Developers quickly spot generic, automated messages, and response rates fall. The tools a company uses also signal its priorities: fully automated outreach tells developers that efficiency matters more than a human-centered workplace. This disconnect often drives qualified candidates away, which is why human oversight is needed to restore authenticity and trust in the hiring process.


Why Human Intent Matters in Developer Recruitment

Building Trust with Personalized Messages

Developers can spot generic, AI-generated outreach a mile away. These messages often prioritize efficiency over genuine connection, and the results speak for themselves. Pankaj Khurana, VP Technology & Consulting at Rocket, shared his team's experience with automated messaging:

"The emails looked polished but felt cold. Engagement dropped. We eventually went back to having humans rewrite the AI drafts. That one shift nearly doubled our positive response rate."

What makes the difference? Empathy. Humans can pick up on subtle details in a developer’s profile, like a move from front-end work to DevOps, and write messages that acknowledge these career shifts in a meaningful way. Research supports this: AI-driven hiring processes are often viewed as less trustworthy, fair, and appealing than human-driven ones. When recruiters take the time to personalize their outreach, developers feel valued as individuals rather than as another entry in a database. This personal touch builds trust and also helps recruiters assess a candidate’s overall fit for a role.

Assessing Soft Skills and Team Compatibility

Beyond trust, evaluating a candidate’s interpersonal skills and team compatibility requires a human touch. AI may excel at matching keywords, but it struggles to interpret the nuances of communication and collaboration. An algorithm can flag a developer with five years of Python experience, yet it can’t tell whether that person’s communication style suits your team’s workflow. Even when AI verifies technical expertise, it often overlooks traits like creativity, adaptability, and teamwork.

More concerning still, some AI tools attempt to analyze body language or vocal tone, often misreading these cues and potentially filtering out strong candidates who don’t fit rigid algorithmic standards. This is where human oversight becomes essential. Recruiters can judge how a developer’s personality and interpersonal style would contribute to a healthy team dynamic, which requires context that data points don’t capture. As one researcher noted, AI lacks the empathy and social intelligence needed to assess complex human traits. The figures reflect this trade-off: AI can cut recruitment costs by 35%, while human-driven methods improve the quality of hires by 52%.

Understanding What Developers Want from Their Careers

Just as personalized outreach builds trust, human insight is key to understanding what developers want from their careers. Resumes and LinkedIn profiles often leave out what actually drives a candidate. A developer might be looking for growth opportunities, a specific team culture, or the chance to work on projects they find meaningful, and an algorithm cannot infer any of that from a profile.

Human recruiters can uncover these motivations by asking the right questions and reading between the lines. They can also recognize valid reasons for career gaps, like caregiving or volunteering, which don’t signal a loss of skills but rather reflect life priorities. Additionally, humans can differentiate between "must-have" and "nice-to-have" qualifications, understanding when a candidate’s core strengths outweigh minor skill gaps. Jesse Hogan, Founder & CTO of Semantic Recruitment, summed up the frustration developers feel with automated systems:

"You're not a person. You're inventory."

Human intent shifts the focus back to treating candidates as whole individuals, considering their unique goals and experiences rather than reducing them to a checklist of skills.

Combining Human Oversight with AI Tools Like daily.dev Recruiter

Using Warm, Double Opt-In Introductions

daily.dev Recruiter changes the process before outreach even starts. Instead of relying on cold emails, it uses warm, double opt-in introductions: the recruiter and the developer must both agree to connect. This eliminates the frustration of contacting candidates who aren’t interested and focuses effort on those who are genuinely open to opportunities.

When developers opt in, it’s a clear signal that they’re ready to engage with the right role - not just tolerating another recruiter email. This mutual interest sets a respectful tone from the start, leading to more productive conversations and better engagement. It’s a proactive approach that streamlines the process of finding the right candidates.
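The double opt-in rule itself is simple to state in code. The sketch below is a minimal, hypothetical model of it (the `Introduction` class and its fields are illustrative assumptions, not daily.dev Recruiter's actual data model): a conversation opens only once both the recruiter and the developer have consented.

```python
from dataclasses import dataclass


@dataclass
class Introduction:
    """Hypothetical double opt-in record; not a real product's data model."""

    recruiter_id: str
    developer_id: str
    recruiter_opted_in: bool = False
    developer_opted_in: bool = False

    def opt_in(self, party: str) -> None:
        """Record consent from one side: "recruiter" or "developer"."""
        if party == "recruiter":
            self.recruiter_opted_in = True
        elif party == "developer":
            self.developer_opted_in = True
        else:
            raise ValueError(f"unknown party: {party!r}")

    @property
    def connected(self) -> bool:
        """The conversation opens only after both sides have said yes."""
        return self.recruiter_opted_in and self.developer_opted_in


intro = Introduction(recruiter_id="r-1", developer_id="d-42")
intro.opt_in("recruiter")
print(intro.connected)  # False: the developer has not agreed yet
intro.opt_in("developer")
print(intro.connected)  # True: both sides consented
```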

Setting Custom Screening Criteria for Better Matches

AI can help identify potential candidates, but it’s human-defined criteria that ensure quality matches. With daily.dev Recruiter, you can add specific screening requirements, such as technical expertise, experience level, location, and even team dynamics. While AI handles the heavy lifting of initial filtering, you remain in control of what truly matters for your role.

Pankaj Khurana from Rocket explains this balance perfectly:

"The AI suggests; the recruiter decides. That balance makes all the difference."

This approach means you’re not just relying on algorithms. Instead, you’re actively curating a candidate pool that aligns with your team’s unique needs.
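One way to picture recruiter-defined criteria is as two tiers: must-haves that act as hard filters and nice-to-haves that only affect ranking, echoing the must-have versus nice-to-have distinction recruiters make when reviewing candidates. The sketch below assumes that structure; `ScreeningCriteria`, `screen`, the weights, and the sample candidates are all hypothetical and do not reflect daily.dev Recruiter's API.

```python
from dataclasses import dataclass, field


@dataclass
class ScreeningCriteria:
    """Recruiter-defined criteria (hypothetical structure, not a product API)."""

    must_have: set[str]
    nice_to_have: dict[str, float] = field(default_factory=dict)  # skill -> weight


@dataclass
class Candidate:
    name: str
    skills: set[str]


def screen(candidates: list[Candidate], criteria: ScreeningCriteria) -> list[tuple[str, float]]:
    """Keep candidates with every must-have, ranked by nice-to-have weight.

    The ranking is only a suggestion; the recruiter still decides who to contact.
    """
    shortlist = []
    for candidate in candidates:
        if criteria.must_have <= candidate.skills:
            bonus = sum(
                weight
                for skill, weight in criteria.nice_to_have.items()
                if skill in candidate.skills
            )
            shortlist.append((candidate.name, bonus))
    return sorted(shortlist, key=lambda pair: pair[1], reverse=True)


criteria = ScreeningCriteria(
    must_have={"go", "postgresql"},
    nice_to_have={"kubernetes": 2.0, "open source": 1.0},
)
pool = [
    Candidate("Ana", {"go", "postgresql", "open source"}),
    Candidate("Ben", {"go", "kubernetes"}),  # lacks a must-have
    Candidate("Chen", {"go", "postgresql", "kubernetes"}),
]
print(screen(pool, criteria))  # [('Chen', 2.0), ('Ana', 1.0)]
```

Keeping nice-to-haves out of the hard filter means a candidate with strong core skills is ranked lower rather than discarded, and the output stays a suggestion for the recruiter to act on.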

Prioritizing High-Context Interactions

Think of AI as your copilot. daily.dev Recruiter offers tools like profile summaries and outreach templates, but it’s up to you to review and personalize every interaction. This ensures your communication stays professional and distinctly human, avoiding any robotic tone.

Khurana emphasizes the importance of this approach:

"Don't automate decisions that undermine trust. Rejections, scores, hiring calls? Keep a human in the loop."

Leverage AI for time-consuming tasks like drafting summaries or flagging candidates, but always add your personal touch to final messages. Over time, you can even rate AI suggestions to fine-tune its understanding of your preferences, keeping human judgment firmly at the core of your recruiting strategy.

Practical Strategies to Balance AI and Human Judgment

Running Regular Algorithm Audits

Set up quarterly audits that compare the AI's candidate rankings with your own evaluations. Monitor fairness metrics such as demographic parity, the gap in selection rates across groups (aim for a difference below 0.05), and track how often recruiters override AI recommendations. A high override rate may indicate that the model needs adjustment.

Consider one example: in July 2025, an HR technology company used V2Solutions' "Algorithmic Equity Playbook" to implement fairness-controlled AI. Over the course of a year, it saw 80,000 new candidate sign-ups, a 50% increase in platform engagement, and noticeable progress in diversity metrics. Tools like Microsoft's Fairlearn and IBM's AIF360 can also help you detect and address bias, so issues are caught before they affect hiring outcomes.
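Both audit metrics described in this section are straightforward to compute. The sketch below assumes you can export, for each candidate, whether the AI advanced them, the recruiter's final call, and a group label for the fairness check; the sample data is invented. It measures demographic parity as the gap between the highest and lowest group selection rates and compares that gap with the 0.05 target. Fairlearn's `fairlearn.metrics.demographic_parity_difference` computes the same gap if you prefer a maintained library.

```python
from collections import defaultdict

PARITY_TARGET = 0.05  # target gap from the audit guidance above


def selection_rates(selected: list[bool], groups: list[str]) -> dict[str, float]:
    """Share of candidates the AI advanced, computed per group."""
    totals: dict[str, int] = defaultdict(int)
    advanced: dict[str, int] = defaultdict(int)
    for was_selected, group in zip(selected, groups):
        totals[group] += 1
        advanced[group] += int(was_selected)
    return {group: advanced[group] / totals[group] for group in totals}


def demographic_parity_difference(selected: list[bool], groups: list[str]) -> float:
    """Gap between the highest and lowest group selection rates (0.0 means parity)."""
    rates = selection_rates(selected, groups)
    return max(rates.values()) - min(rates.values())


def override_rate(ai_calls: list[bool], human_calls: list[bool]) -> float:
    """Fraction of candidates where the recruiter reversed the AI's decision."""
    overrides = sum(ai != human for ai, human in zip(ai_calls, human_calls))
    return overrides / len(ai_calls)


# Invented quarterly sample: eight candidates in two groups.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
ai_calls = [True, True, False, True, True, False, False, False]
human_calls = [True, False, False, True, True, True, False, False]

gap = demographic_parity_difference(ai_calls, groups)
print(f"parity gap: {gap:.2f}, within target: {gap < PARITY_TARGET}")
print(f"override rate: {override_rate(ai_calls, human_calls):.2f}")
```

In this invented sample the AI advances 75% of group A but only 25% of group B, far outside the target, and recruiters reverse one decision in four. Either result would justify a closer look at the model before the next quarter.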

Adding Human Review Before Outreach

Avoid letting AI send messages without human input. In May 2025, Rocket's VP of Technology, Pankaj Khurana, shared that their initial AI-generated emails caused a drop in candidate engagement because the messages felt impersonal. When they introduced human oversight to rewrite AI drafts, their positive response rate nearly doubled.

Set clear thresholds for human intervention. For instance, flag candidates with AI confidence scores below 70% for manual review. Perform "blind" audits where recruiters assess candidates without seeing AI scores, then compare their evaluations to the AI's. This helps identify areas where the algorithm might lack context or make questionable recommendations. These steps create a balanced workflow that blends automation with human expertise.
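The threshold and blind-audit ideas above can be sketched together. This is a minimal illustration under stated assumptions: each AI recommendation carries a confidence value between 0 and 1 (not every tool exposes one), the 0.70 cutoff mirrors the 70% example, and the `Recommendation` type and candidate IDs are hypothetical.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.70  # mirrors the 70% example; tune for your own pipeline


@dataclass
class Recommendation:
    candidate_id: str
    advance: bool       # the AI's suggested decision
    confidence: float   # assumed model confidence in [0, 1]


def needs_manual_review(rec: Recommendation) -> bool:
    """Low-confidence suggestions go to a recruiter instead of being acted on."""
    return rec.confidence < REVIEW_THRESHOLD


def blind_audit(
    recs: list[Recommendation], human_calls: dict[str, bool]
) -> tuple[float, list[str]]:
    """Compare AI calls with recruiters who judged the same candidates blind.

    Returns the agreement rate and the IDs where the two disagree.
    """
    disagreements = [r.candidate_id for r in recs if r.advance != human_calls[r.candidate_id]]
    agreement = 1 - len(disagreements) / len(recs)
    return agreement, disagreements


recs = [
    Recommendation("c1", advance=True, confidence=0.92),
    Recommendation("c2", advance=False, confidence=0.55),
    Recommendation("c3", advance=True, confidence=0.81),
    Recommendation("c4", advance=False, confidence=0.64),
]
print([r.candidate_id for r in recs if needs_manual_review(r)])  # ['c2', 'c4']
print(blind_audit(recs, {"c1": True, "c2": True, "c3": True, "c4": False}))  # (0.75, ['c2'])
```

Disagreements from the blind audit are the cases worth discussing as a team, since they point to where the algorithm may lack context.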

Building Hybrid Workflows That Work

Combine human oversight with AI-driven systems to keep hiring decisions in your control. A layered recruitment process works well: let AI handle initial filtering and content drafting, while humans oversee the final decisions. For instance, daily.dev Recruiter uses a warm, double opt-in system to surface relevant candidates. AI assists with scheduling and summaries, while humans handle interviews, feedback, and extending offers.

To refine this process, establish structured feedback loops. Regularly review AI suggestions and provide input, helping the system adapt to your preferences over time. This "machine teaching" approach ensures the AI evolves while preserving human judgment as the ultimate authority.
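A feedback loop needs a record of how recruiters react to AI suggestions. The sketch below is a hypothetical `FeedbackLog` that tallies accept/reject verdicts by the reason the AI gave for each suggestion and surfaces the reasons recruiters mostly reject. It does not retrain anything itself; the assumption is that these signals feed whatever tuning your tool or team supports, and the 0.5 floor and three-sample minimum are arbitrary placeholders.

```python
from collections import Counter


class FeedbackLog:
    """Hypothetical log of recruiter verdicts on AI suggestions."""

    def __init__(self) -> None:
        self.shown: Counter[str] = Counter()
        self.accepted: Counter[str] = Counter()

    def record(self, reason: str, accepted: bool) -> None:
        """Store one verdict, keyed by the reason the AI gave for its suggestion."""
        self.shown[reason] += 1
        if accepted:
            self.accepted[reason] += 1

    def acceptance_rates(self) -> dict[str, float]:
        """Share of suggestions recruiters accepted, per reason."""
        return {reason: self.accepted[reason] / n for reason, n in self.shown.items()}

    def weak_signals(self, floor: float = 0.5, min_samples: int = 3) -> list[str]:
        """Reasons recruiters usually reject: candidates for reweighting."""
        return sorted(
            reason
            for reason, rate in self.acceptance_rates().items()
            if self.shown[reason] >= min_samples and rate < floor
        )


log = FeedbackLog()
for verdict in (True, True, False, True):
    log.record("matching open-source work", verdict)
for verdict in (False, False, True):
    log.record("same previous employer", verdict)
log.record("recent Rust commits", False)  # too few samples to judge yet

print(log.weak_signals())  # ['same previous employer']
```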

Conclusion: Better Developer Recruitment Through AI and Human Intent

Throughout this discussion, one thing stands out: while AI-powered tools can scan thousands of profiles and even spot resume inconsistencies, they can’t replace the empathy, judgment, and contextual understanding that recruiters bring to the table. It’s worth noting that 42% of global companies now use AI in hiring, yet 49% of U.S. job seekers perceive these systems as biased. This highlights a critical issue: automation without proper oversight can create more challenges than it solves.

The key isn’t choosing between AI and human involvement but finding the right balance between the two. AI excels at repetitive tasks like resume screening and parsing, freeing up recruiters to focus on what truly matters: evaluating soft skills, building genuine connections, and understanding developers’ career aspirations. As Pankaj Khurana from Rocket aptly stated:

"The AI suggests; the recruiter decides. That balance makes all the difference".

Platforms like daily.dev Recruiter embody this principle. Rather than relying on cold outreach or generic messaging, they use warm, double opt-in introductions to connect recruiters with developers who are open to meaningful conversations. AI handles the heavy lifting - matching and scheduling - while recruiters focus on fostering relationships and making informed decisions. Studies show that these warm, opt-in methods consistently outperform traditional approaches, reinforcing the importance of human involvement.

Moving forward, best practices should include regular algorithm audits, manual reviews before outreach, and clear protocols for human intervention when AI confidence is low. These steps ensure a better candidate experience and maintain hiring quality. When AI is treated as a copilot rather than an autopilot, organizations can achieve the speed and efficiency of automation while preserving the trust, precision, and human touch that are essential in tech recruitment. AI is a powerful tool, but it’s not a replacement for the human connection that defines successful hiring.

FAQs

Which recruiting tasks should AI handle vs humans?

AI excels at tasks such as resume screening, keyword matching, and shortlisting - areas where speed and pattern recognition are crucial. On the other hand, humans are better suited for more nuanced responsibilities like evaluating fit within a team, conducting interviews, and making final decisions. These tasks rely on emotional intelligence, judgment, and the ability to build trust - qualities AI simply doesn’t possess. By blending AI's efficiency with human oversight, companies can achieve a balance that promotes fairness, fosters personalized interactions, and leads to stronger hiring results.

How can I spot and reduce AI sourcing bias?

AI-driven sourcing can sometimes amplify existing biases, resulting in unfair hiring practices. To combat this, it's crucial to involve human oversight to carefully review AI-generated outputs and spot any hidden biases. Regular audits of AI models are essential, alongside implementing algorithms designed to reduce bias. Additionally, training recruiters to identify and address potential bias in the hiring process is key. By blending technical solutions, consistent monitoring, and human judgment, companies can work toward creating a hiring process that is fair and inclusive for everyone.

How do I personalize outreach without losing speed?

To make outreach feel personal and effective, zero in on details that matter - like a developer's specific skills, past projects, or unique interests. Mentioning their contributions directly shows you’ve done your homework and helps establish trust right away. Leverage tools that use context-driven data to streamline this process, allowing you to automate without losing that personal touch. Striking this balance between efficiency and genuine connection ensures your outreach feels meaningful and resonates with developers.
